

Evaluation Capacity Building (ECB) 'Toolkit'

The ECB ‘toolkit’ seeks to:

- complement the guidance provided by HEFCE regarding evaluation and in particular details about the collection of 'core participant data' (see 1A: A Summary of HEFCE Evaluation Guidance pdf 470kB)

- outline the steps taken during the development of an evaluation plan

- offer ideas for how to incorporate the core features in an evaluation plan and subsequent evaluations

- provide materials to support evaluation planning including evaluation methods

- list existing evaluation resources and suggest how they might be used when developing an evaluation plan and undertaking subsequent evaluations.

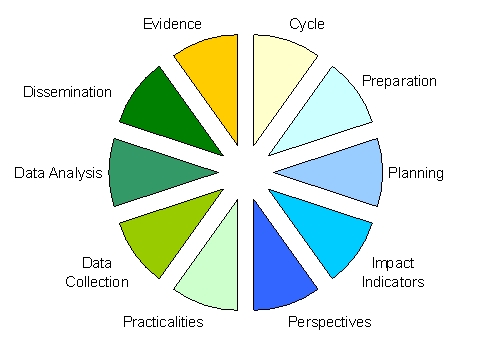

‘Toolkit’ Structure: Ten features of evaluation

The ‘toolkit’ outlines a number of activities that connect to ten ‘features’ of the evaluation process. Although neither the order nor the content of the features is fixed, we have numbered each feature so that activities, information, presentations and websites can be linked to it within the ‘toolkit’.

| No. | Feature | Focus |
|---|---|---|
| 1 | Evaluation Cycle | generating, implementing and revising a plan; reviewing future action |
| 2 | Evaluation Preparation | situational analysis of existing evaluation and data already collected |
| 3 | Evaluation Planning | a framework for evaluation planning; asking RUFDATA questions to help determine the content of the plan |
| 4 | Evaluation Impact Indicators | levels of impact and effect, plus Enabling, Process and Outcomes indicators |
| 5 | Evaluation Perspectives | ways of collaborating for evaluation; the internal and external rationale for evaluation |
| 6 | Evaluation Practicalities | collecting and sharing data; other methodological and ethical considerations |
| 7 | Evaluation Data Collection | participant data; progression and attainment; ‘how to’ guides on qualitative data |
| 8 | Evaluation Data Analysis | links between quantitative and qualitative data; how to analyse qualitative data |
| 9 | Evaluation Dissemination | ways of sharing findings with different audiences |
| 10 | Evaluation Evidence | evaluation plans and reports; ideas for making an evidence-based case |

Some features address underpinning principles and are particularly useful for individuals or groups who wish to understand the wider context or appreciate the connections between features within the evaluation process. Other features focus on practical considerations and offer a selection of activities from which you can choose those best suited to your context and the time you have available. Because the context of each HE institution and Aimhigher Partnership is unique, you may already have covered some of the activities within the initial features.

'Toolkit' structure: Types of information

The ‘toolkit’ will include five types of information to help you assemble your evaluation plan. For most stages/steps of the process there will be additional information and optional activities that some or all of the team producing the plan may want to read or follow up. The core stages/steps in the process are outlined in the section entitled ‘Things to do’.

| Type of information | Description |
|---|---|
| Things to do | Each section of the ‘toolkit’ will contain a list of steps to take in order to produce your evaluation plan. |
| Activity | These will be available on the website (or in an appendix) and will provide instructions or templates for conducting an individual or group activity. |
| Information | These will provide additional information on a specific topic or refer to other resources available on it. |
| Presentation | A series of PowerPoint slides for use within institutions, often summarising the key points on a particular topic. |
| Website | PDF documents giving details of external websites on a particular topic. These will be listed on the ECB website for widening participation practitioners; the exact format has still to be determined. |

See ECB ‘Toolkit’ Materials for a full list of resources. The initial number of each resource corresponds to the number of its feature; for example, all Evaluation Audit resources begin with 3. Each resource is then given a letter (A, B or C) to provide a unique identification label.

Background and overview of the ECB 'toolkit'

This ‘toolkit’ builds on the findings from the HEFCE ‘widening participation capacity building in evaluation’ project undertaken by CSET, Lancaster University. It provides details of the tools, approaches and processes piloted during consultancies with 10 HE institutions and 2 Aimhigher Partnerships, together with feedback from participants attending capacity building workshops held between January and June 2008.

The importance of localised evaluation to complement a national programme of evaluation is acknowledged by the funding bodies (HEFCE, DIUS and DCSF). For reasons of accountability, value for money and future policy decisions, they understandably seek to establish a convincing evidence base that allows them and others to feel confident that there is a reasonable link between widening participation interventions and the awareness, attitudes and actions of individual participants and institutional stakeholders. Evaluation of a complex agenda such as widening participation, with its multiple stakeholders, participants and interventions, is not a scientific endeavour and consequently will never generate causal proof. At best, evaluation will produce an evidence case akin to that presented in a court of law, where evaluators make a claim for probable and possible connections that others judge to be reasonable.

HEFCE have issued explicit guidance for Aimhigher Partnerships to produce an evaluation plan by July 2008, and HE institutions are actively encouraged to develop their own evaluation plans so that they can disseminate the evidence emerging from evaluation of their widening participation activity. Each HE institution engages in a complex set of widening participation activities shaped by its history, geography, student profile and institutional strategy. Although widening participation covers both pre- and post-arrival activity, the current focus of evaluation plans is work that may be funded by the HEFCE widening participation premium, fee income for outreach identified in the institution's Access Agreement, funding received through involvement in Aimhigher Partnerships, or external funding for specific widening participation interventions.

Evaluation 'toolkit'

The image of a ‘toolkit’ is open to interpretation and is only one of many descriptions for this type of resource; alternative terms that others have used include guidance, handbook, manual and framework. The resources in the ‘toolkit’ are available in a range of formats, including books, journals, presentations and websites. A ‘toolkit’ allows users to select and use tools according to their context, which is why there are multiple resources for most features of the evaluation process. We acknowledge that some people prefer to follow a set of instructions, more akin to a ‘how to’ guide. Personal preference will determine whether this ‘how to’ guide is viewed as:

- a recipe book, with the evaluation features representing the ingredients and the method for producing the evaluation meal;

- a car journey, with the evaluation features representing the map or route to follow, the places to include on the journey and the postcards showing where you’ve been and what you’ve found out;

- a DIY self-assembly diagram, with the evaluation features representing a list of items, with directions and diagrams to guide you in producing your table, chair or fully fitted kitchen!

No doubt other metaphors exist, and those who like working in this way may choose to devise their own. It is, however, important to remember that although evaluation is a creative process, it also needs to be rigorous. In devising this ‘toolkit’ we have attempted to provide both the tools and guidance about how, when and why to use them. As with any good ‘toolkit’, new tools will always be needed and becoming available, and there are many benefits in sharing tools and ideas for how to use them. For this reason, resources will be located on an evaluation website, which we hope to build on in the future.

What is evaluation?

For the purposes of this evaluation ‘toolkit’ we define evaluation as: “the purposeful gathering, analysis and discussion of evidence from relevant sources about the quality, worth and impact of provision, development or policy” (CSET, working definition of evaluation).

See also the AJ Associates report (Evaluation Toolkit for the Voluntary and Community Arts in N. Ireland 2004, pdf, 303kb), which provides a one-page summary answering the question ‘what is evaluation?’ and explains the role of questions, evidence, causation, perspectives, reflection and learning in evaluation (p. 8).

This evaluation ‘toolkit’ contains ten sections. Each presents information, ideas and illustrative examples of a particular feature of the evaluation process. The ‘toolkit’ does not attempt to re-invent the wheel and we make no apologies for directing readers to existing resources that we have used to support ‘thinking about’, ‘planning for’ and ‘doing’ evaluation, as well as materials devised for and used with the HE institutions and Aimhigher Partnerships with whom we have worked during the consultancy.

From the “divergent phase” to the “convergent phase” of evaluation planning

The unique context of each HE institution and Aimhigher Partnership means that starting positions will differ, and so will the way you use the different sections of the ‘toolkit’. In our experience, HE institutions and Aimhigher Partnerships appear to follow a capacity building journey as they begin to develop their evaluation plans. This involves starting small and tentatively, expanding into broader discussion of evaluation as a methodology, taking account of the politics of HE, working out an acceptable and achievable strategy, and then returning to smaller concerns about the particular data collection strategies that might be used. It is a case of moving from a “divergent phase” to a “convergent phase”. Not everyone will be interested in every stage of the journey, and this will influence their involvement and engagement with the activities.

Terminology

As with any specialist activity, the world of evaluation is awash with technical terminology. We are developing a glossary of common terms that defines each term as we have used it and, where appropriate, refers to other sources that explain the concept. It is a useful exercise to build up your own definitions and to make sure that those with whom you work share your understanding and interpretation. For links to existing glossaries of evaluation and research terminology, see the QDA Online Glossary.